github.com

A nice cheatsheet/summary for software architecture

yuu

1 year ago

•

100%

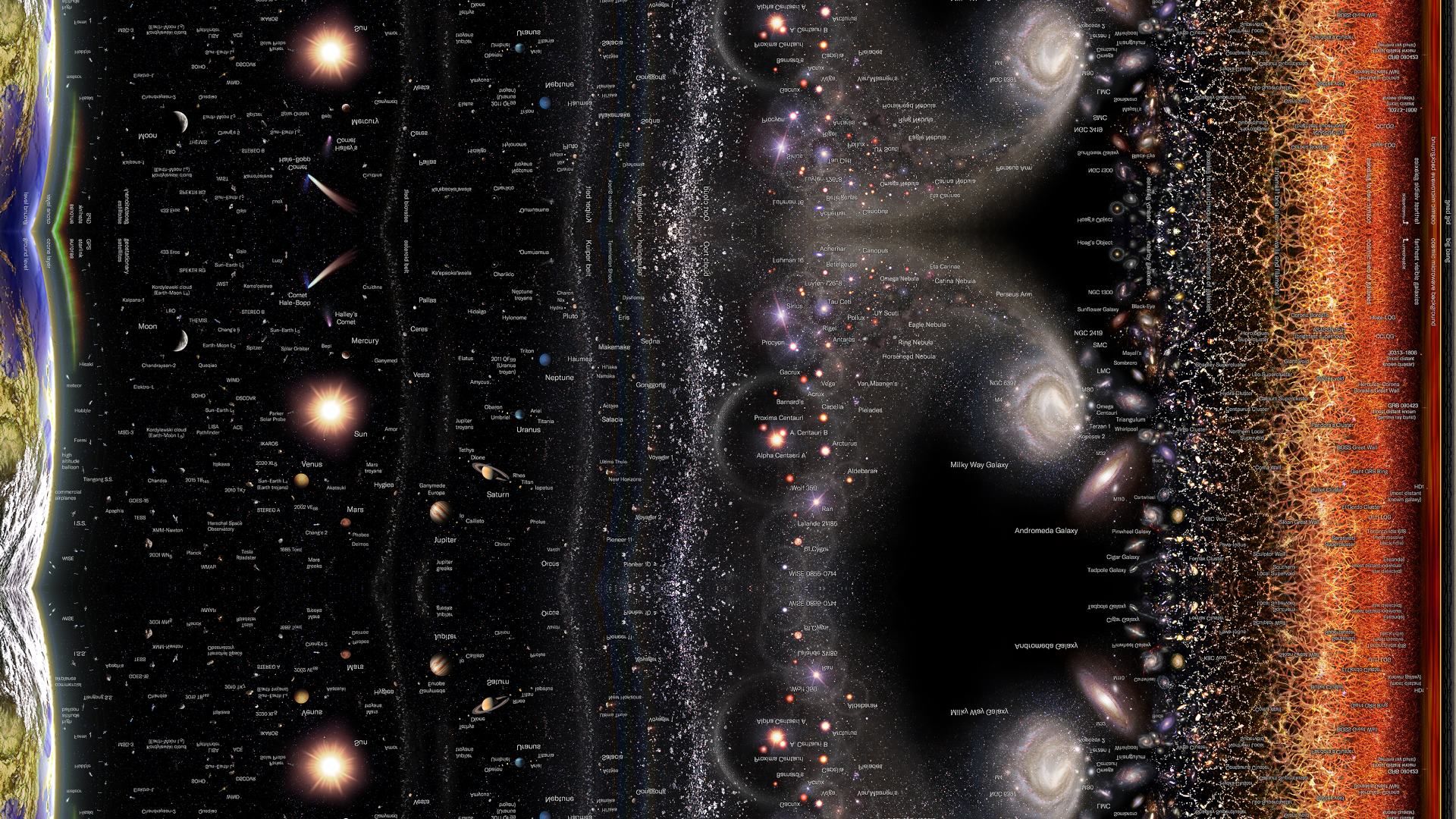

oh this is one of my wallpapers

i did a 1920x1080 version out of it by horizontally tiling 3 duplicates of it like this (i got the freely licensed version from wikimedia commons under https://creativecommons.org/licenses/by-sa/4.0/deed.en)

yuu

1 year ago

•

100%

just use a community-led or non-profit-foundation-led distro: NixOS (better than Silverblue/Kinoite in all aspects they try to sell), Arch, or Debian.

For professional usage, you generally go Ubuntu, or some RHEL derivative.

skyheadlines.com

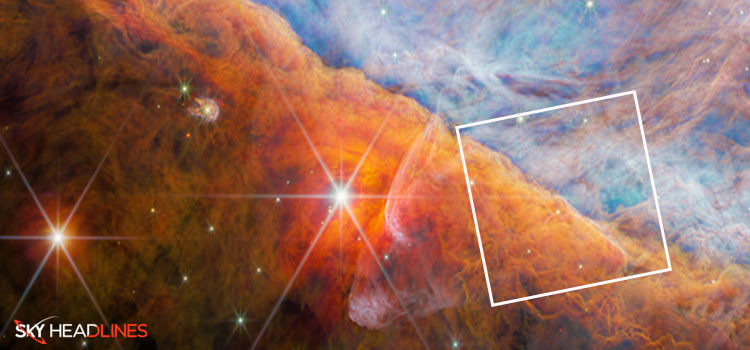

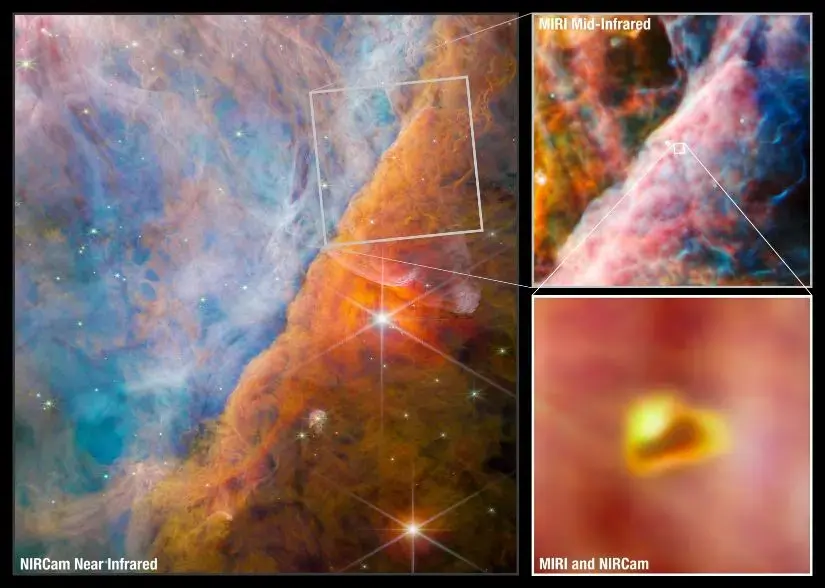

> For the first time ever in space, scientists discovered a novel carbon molecule known as methyl cation (CH3+). This molecule is significant because it promotes the synthesis of more complex carbon-based compounds.

Originally posted on https://emacs.ch/@yantar92/110571114222626270

> Please help collecting statistics to optimize Emacs GC defaults
>
> Many of us know that Emacs defaults for garbage collection are rather ancient and often cause significant slowdowns. However, it is hard to know which alternative defaults will be better.
>
> Emacs devs need help from users to obtain real-world data about Emacs garbage collection. See the discussion in https://yhetil.org/emacs-devel/87v8j6t3i9.fsf@localhost/
>
> Please install https://elpa.gnu.org/packages/emacs-gc-stats.html and send the generated statistics via email to emacs-gc-stats@gnu.org after several weeks.

yuu

1 year ago

•

100%

That is ok. As far as I understand, this is how lemmy<->mastodon activity-pub integration is supposed to work.

yuu

1 year ago

•

100%

Thanks for following us! That's surely a cool feature on the fediverse. Feel welcome and free to post and comment anything related to software engineering.

There is also !seresearch@lemmy.ml which @rahulgopinath@lemmy.ml moderates.

yuu

1 year ago

•

100%

I think it will eventually be the best alternative, but I won't be using bcachefs for the next few years... For any server or professional needs, I'd go with ZFS. On my personal systems, I use Btrfs, including on NixOS.

> In May 2023 over 90,000 developers responded to our annual survey about how they learn and level up, which tools they're using, and which ones they want.
>
> ...
>
> This year, we went deep into AI/ML to capture how developers are thinking about it and using it in their workflows. Stack Overflow is investing heavily in enhancing the developer experience across our products, using AI and other technology, to get people to solutions faster.

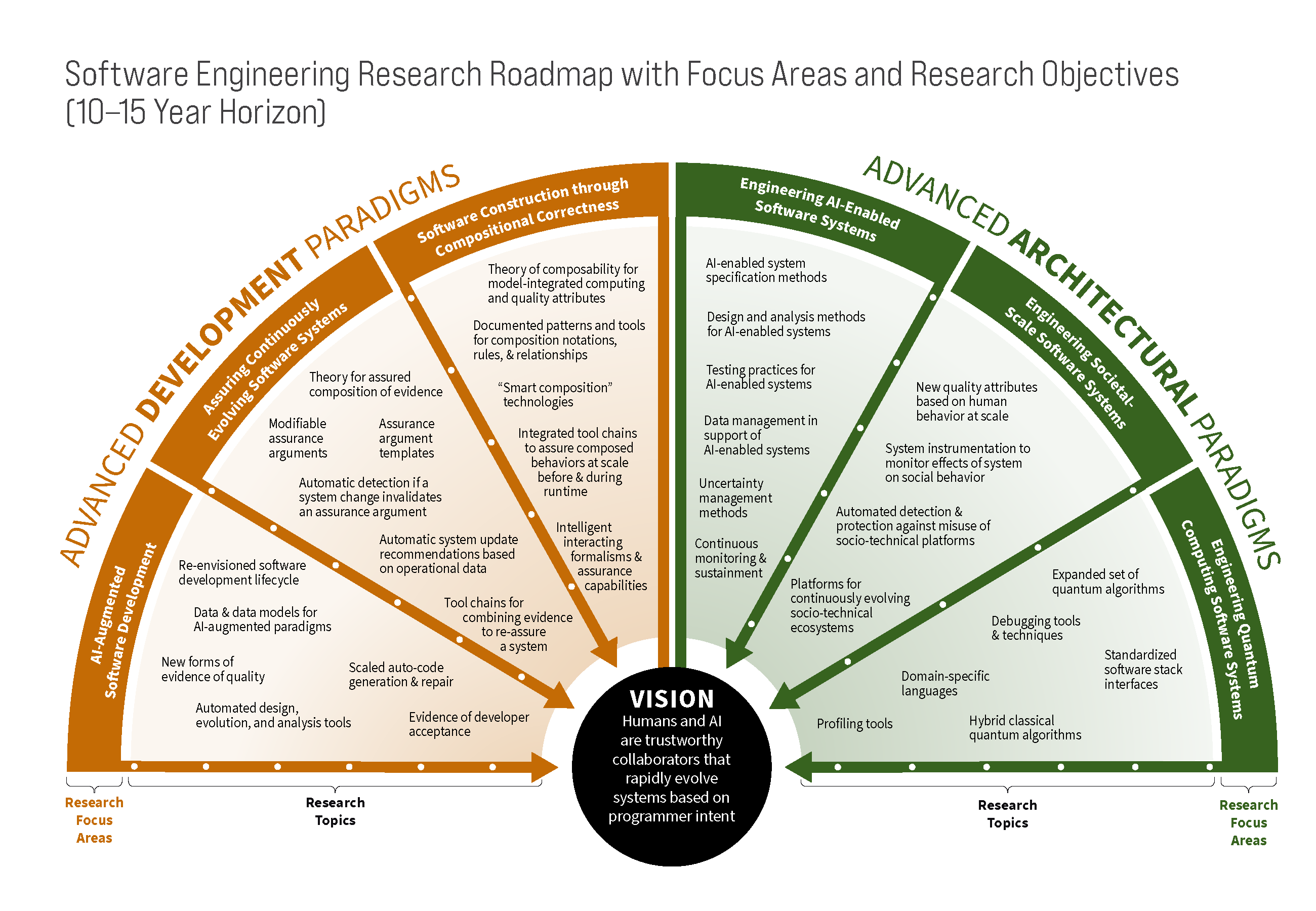

NOTE: This is primarily an invitation for the "[SEI and White House OSTP to Explore the Future of Software and AI Engineering](https://www.eventbrite.com/e/us-leadership-in-software-engineering-ai-engineering-workshop-registration-601593190427)", but there is this big section on the "future of software engineering" that is very interesting.

> As discussed in [Architecting the Future of Software Engineering: A Research and Development Roadmap](https://insights.sei.cmu.edu/blog/architecting-the-future-of-software-engineering-a-research-and-development-roadmap), the SEI developed six research focus areas in close collaboration with our advisory board and other leaders in the software engineering research community
>
> ...
>
> AI-Augmented Software Development: By shifting the attention of humans to the conceptual tasks that computers are not good at and eliminating human error from tasks where computers can help, AI will play an essential role in a new, multi-modal human-computer partnership... taking advantage of the data generated throughout the lifecycle
>
> Assuring Continuously Evolving Software Systems: ...generating error-free code, especially for trivial implementation tasks... generating surprising recommendations that may themselves create additional assurance concerns... develop a theory and practice of rapid and assured software evolution that enables efficient and bounded re-assurance of continuously evolving systems
>
> Software Construction through Compositional Correctness: ...unrealistic for any one person or group to understand the entire system... need to integrate (and continually re-integrate) software-reliant systems... create methods and tools that enable the intelligent specification and enforcement of composition rules that allow (1) the creation of required behaviors (both functionality and quality attributes) and (2) the assurance of these behaviors at scale
>
> Engineering AI-enabled Software Systems: ...AI-enabled systems share many parallels with developing and sustaining conventional software-reliant systems. Many future systems will likely either contain AI-related components, including but not limited to LLMs, or will interface with other systems that execute capabilities using AI... focus on exploring which existing software engineering practices can reliably support the development of AI systems and the ability to assess their output, as well as identifying and augmenting software engineering techniques for specifying, architecting, designing, analyzing, deploying, and sustaining AI-enabled software systems
>
> Engineering Socio-Technical Systems: ... As generative AI makes rapid progress, these societal-scale software systems are also prone to abuse and misuse by AI-enabled bad actors via techniques such as chatbots imitating humans, deep fakes, and vhishing... leverage insights from such as the social sciences, as well as regulators and legal professionals to build and evolve societal-scale software systems that consider these challenges and attributes.
>
> Engineering Quantum Computing Software Systems: ...enable the programming of current quantum computers more easily and reliably and then enable increasing abstraction as larger, fully fault-tolerant quantum computing systems become available... create approaches that integrate different types of computational devices into predictable systems and a unified software development lifecycle.

> Conclusion: Four Areas for Improving Collaboration on ML-Enabled System Development
>
> Data scientists and software engineers are not the first to realize that interdisciplinary collaboration is challenging, but facilitating such collaboration has not been the focus of organizations developing ML-enabled systems. Our observations indicate that challenges to collaboration on such systems fall along three collaboration points: requirements and project planning, training data, and product-model integration. This post has highlighted our specific findings in these areas, but we see four broad areas for improving collaboration in the development of ML-enabled systems:
>
> Communication: To combat problems arising from miscommunication, we advocate ML literacy for software engineers and managers, and likewise software engineering literacy for data scientists.
>
> Documentation: Practices for documenting model requirements, data expectations, and assured model qualities have yet to take root. Interface documentation already in use may provide a good starting point, but any approach must use a language understood by everyone involved in the development effort.
>
> Engineering: Project managers should ensure sufficient engineering capabilities for both ML and non-ML components and foster product and operations thinking.
>
> Process: The experimental, trial-and-error process of ML model development does not naturally align with the traditional, more structured software process lifecycle. We advocate for further research on integrated process lifecycles for ML-enabled systems.

More: https://conf.researchr.org/details/icse-2022/icse-2022-papers/153/Collaboration-Challenges-in-Building-ML-Enabled-Systems-Communication-Documentation

PS: This one is from months ago, but still interesting

medium.com

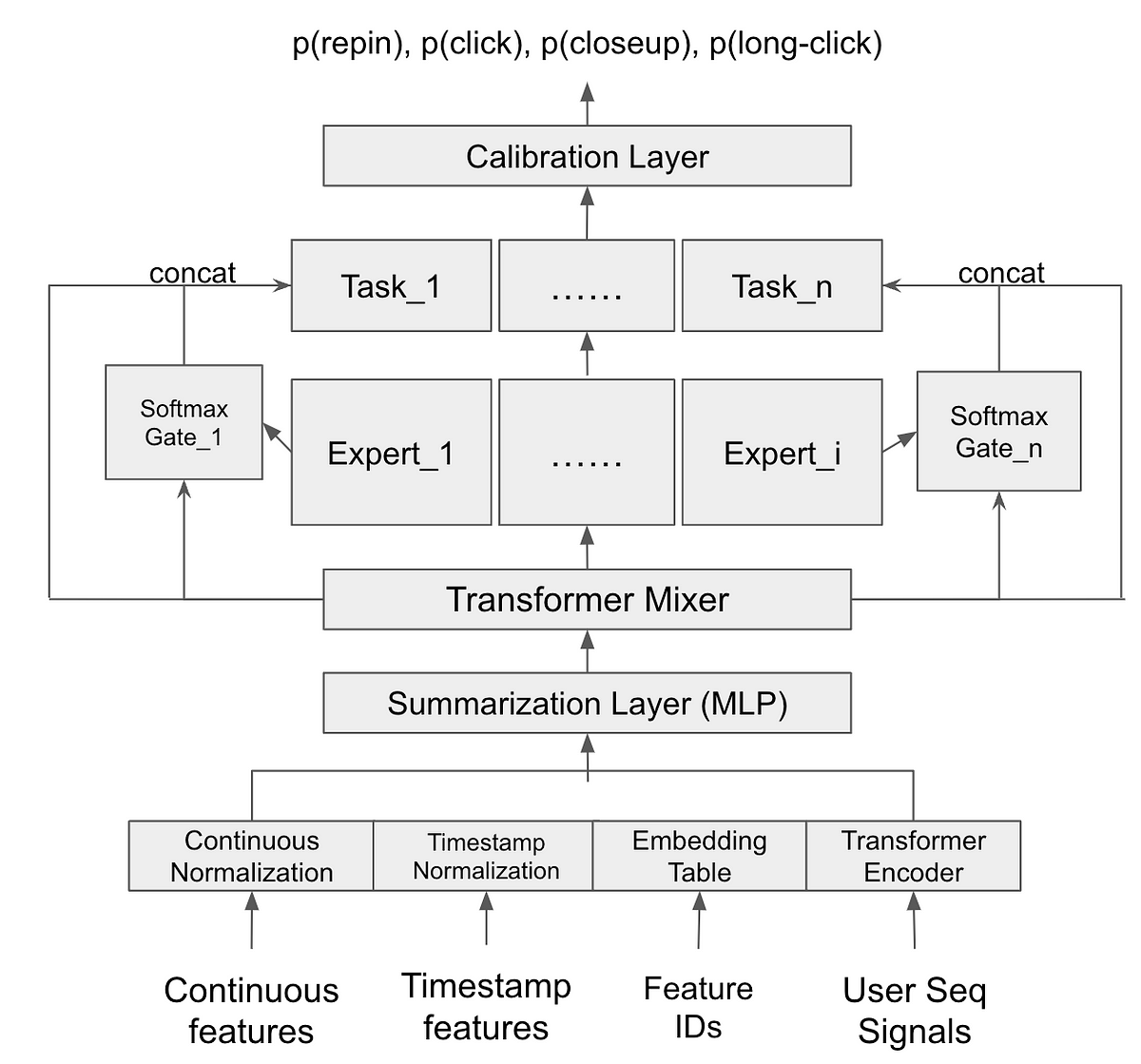

> At Pinterest, Closeup recommendations (aka Related Pins) is typically a feed of recommended content (primarily Pins) that we serve on any pin closeup. Closeup recommendations generate the largest amount of impressions among all recommendation surfaces at Pinterest and are uniquely critical for our users’ inspiration-to-realization journey. It’s important that we surface qualitative, relevant, and context-and-user-aware recommendations for people on Pinterest.

See also: https://group.lt/post/46301

github.com

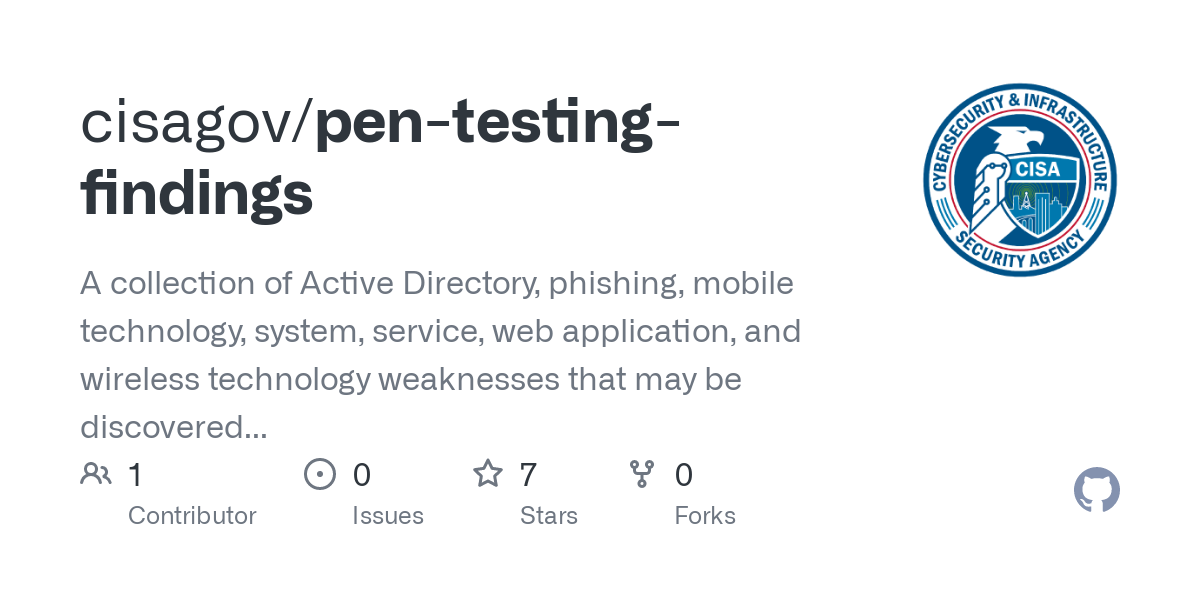

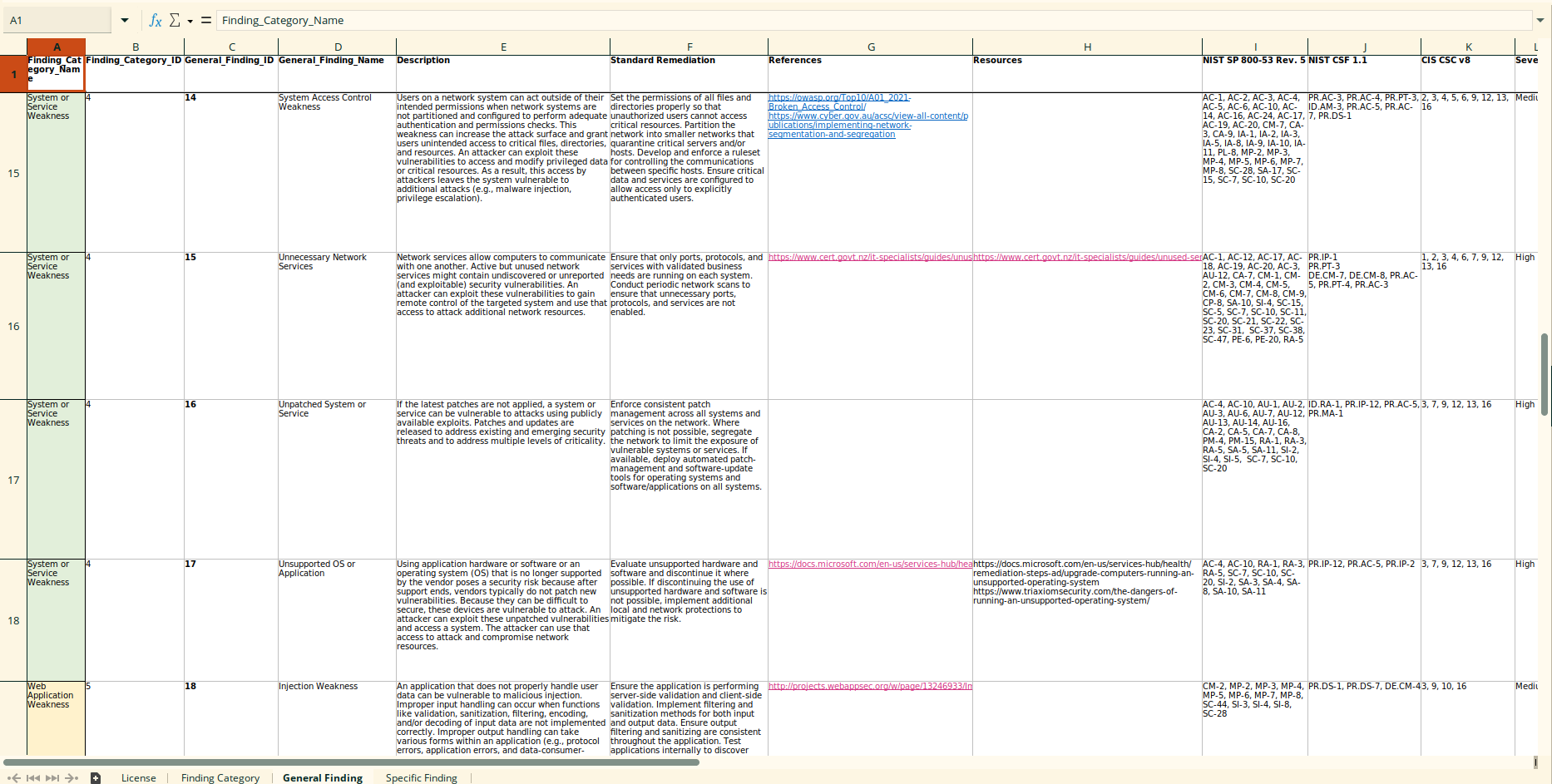

> The repository consists of three layers:
>
> 1. Finding Category layer lists the overarching categories
> 2. General Finding layer lists high-level findings
> 3. Specific Finding layer lists low-level findings

Just an overview of the general findings

More: https://cmu-sei-podcasts.libsyn.com/a-penetration-testing-findings-repository

medium.com

> 1. Introduction: What are Miho and its role as a brand; what problem are they solving; how they are solving the problem
> 2. Competitive analysis
> 3. Logo and Design Guidelines
> 4. Information Architecture
> 5. Iterations ... in the form of wireframes
> 6. UI Designs: Homepage: appealing, informative, and engaging... users could easily know “what” and “for whom” this website is for; Product detail page; Gift Shop Page; Gift detail page; About Page;

medium.com

> 1. [Affectiva's Emotion AI](https://www.affectiva.com/product/affectiva-media-analytics-for-qualitative-research) ... facial analysis and emotion recognition to understand user emotional responses
> 2. [Ceros' Gemma](https://www.ceros.com/gemma/) ... generate new ideas, optimize existing designs, ... learn from your ideas and creative inputs, providing designers with personalized suggestions
> 3. [A/B Tasty](https://www.abtasty.com/) ... UX designers to run A/B tests and optimize user experiences
> 4. [Slickplan](https://slickplan.com/sitemap/visual-generator) ... sitemap generator and information architecture tool
> 5. [SketchAR](https://sketchar.io/) ... creating accurate sketches and illustrations
> 6. [Xtensio](https://xtensio.com/design/) ... user personas, journey maps, and other UX design deliverables
> 7. [Voiceflow](https://www.voiceflow.com/) ... create voice-based applications and conversational experiences

PS: Sounds like an ad, but still interesting to see tools in the wild that support AI for these conceptual phases in software engineering

uxdesign.cc

> In today’s hybrid work landscape, meetings have become abundant, but unfortunately, many of them still suffer from inefficiency and ineffectiveness. Specifically, meetings aimed at generating ideas to address various challenges related to people, processes, or products encounter recurring issues. The lack of a clear goal in these meetings hinders active participation, and the organizer often dominates the conversation, resulting in a limited number of ideas that fail to fully solve the problem. Both the organizer and attendees are left feeling dissatisfied with the outcomes.
>
> ...
>
> The below sample agenda assumes that problem definition is clear. If that is not the case, hold a session prior to the ideation session to align on the problem. Tools such as interviewing, [Affinity Mapping](https://careerfoundry.com/en/blog/ux-design/affinity-map/), and developing [User Need statements](https://www.nngroup.com/articles/user-need-statements/) and ["How Might We"](https://www.nngroup.com/articles/how-might-we-questions/) questions can be useful in facilitating that discussion.
>
> A sample agenda for ideation sessions
>
> Estimated time needed: 45–60 minutes
>
> 1. Introduction & ground rules (2 minutes)
>    - Share the agenda for the ideation session.
>    - Review any [ground rules or guidelines](https://www.ideou.com/blogs/inspiration/7-simple-rules-of-brainstorming) for the meeting.
>    - Allow time for attendees to ask questions or seek clarification.
>
> 2. Warm-up exercise (5–10 minutes)
>    - Conduct a warm-up exercise to foster creativity and build rapport among participants such as [30 Circles](https://www.ideo.com/blog/build-your-creative-confidence-thirty-circles-exercise) or [One Thing, Nine Ways](https://mbcollab.com/blog/six-creative-warmups-to-get-your-team-in-the-right-mindset).
>    - Choose an activity that aligns with the goals of the meeting and reflects the activities planned for the session. A quick Google search for “warm-up exercises design thinking” will return several potential activities.
>
> 3. Frame the problem (2 minutes)
>    - Share a single artifact (slide, Word Doc, section of text in whiteboard tool) that serves as a summary of the problem, providing participants with a reference point to anchor their thinking and revisit as needed throughout the session.
>
> 4. Guided ideation & dot voting (30 minutes)
>    - Select 1–2 guided ideation exercises such as [Crazy 8s](https://www.iamnotmypixels.com/how-to-use-crazy-8s-to-generate-design-ideas/), [Mash-up](https://www.mural.co/blog/remote-ideation-techniques), [Six Thinking Hats](https://www.debonogroup.com/services/core-programs/six-thinking-hats/). A quick Google search for “ideation techniques design thinking” will return tons of potential exercises.
>    - Provide clear instructions.
>    - Use [Dot Voting](https://lucidspark.com/blog/dot-voting) and/or [Prioritization Matrix](https://www.ibm.com/design/thinking/page/toolkit/activity/prioritization) to select the most promising ideas.
>
> 5. Next steps & closing remarks (5 minutes)
>    - Assign owners or champions for the selected ideas who will be responsible for driving their implementation (if not already known).
>    - Summarize the key decisions made and actions to be taken.
>    - Clarify any follow-up tasks or assignments.
>    - Express gratitude for participants’ contributions and conclude the meeting on a positive note.

yuu

1 year ago

•

100%

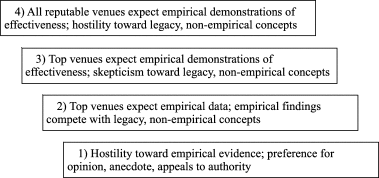

It is almost like things such as PMBOK... they have no basis in the scientific method (they are not empirically grounded), and their origins trace back to DoD needs

Also reminds me of this important research article "The two paradigms of software development research" posted here before https://group.lt/post/46119

The two categories of models use substantially different terminology. The Rational models tend to organize development activities into minor variations of requirements, analysis, design, coding and testing – here called Royce's Taxonomy because of their similarity to the Waterfall Model. All of the Empirical models deviate substantially from Royce's Taxonomy. Royce's Taxonomy – not any particular sequence – has been implicitly co-opted as the dominant software development process theory [5]. That is, many research articles, textbooks and standards assume:

- Virtually all software development activities can be divided into a small number of coherent, loosely-coupled categories.

- The categories are typically the same, regardless of the system under construction, project environment or who is doing the development.

- The categories approximate Royce's Taxonomy.

...

Royce's Taxonomy is so ingrained as the dominant paradigm that it may be difficult to imagine a fundamentally different classification. However, good classification systems organize similar instances and help us make useful inferences [98]. Like a good system decomposition, a process model or theory should organize software development activities into categories that have high cohesion (activities within a category are highly related) and loose coupling (activities in different categories are loosely related) [99].

Royce's Taxonomy is a problematic classification because it does not organize like with like. Consider, for example, the design phase. Some design decisions are made by “analysts” during what appears to be “requirements elicitation”, while others are made by “designers” during a “design meeting”, while others are made by “programmers” while “coding” or even “testing.” This means the “design” category exhibits neither high cohesion nor loose coupling. Similarly, consider the “testing” phase. Some kinds of testing are often done by “programmers” during the ostensible “coding” phase (e.g. static code analysis, fixing compilation errors) while others often done by “analysts” during what appears to be “requirements elicitation” (e.g. acceptance testing). Unit testing, meanwhile, includes designing and coding the unit tests.

> Early galaxies' stars allowed light to travel freely by heating and ionizing intergalactic gas, clearing vast regions around them.
>
> Cave divers equipped with brilliant headlamps often explore cavities in rock less than a mile beneath our feet. It’s easy to be wholly unaware of these cave systems – even if you sit in a meadow above them – because the rock between you and the spelunkers prevents light from their headlamps from disturbing the idyllic afternoon.
>
> Apply this vision to the conditions in the early universe, but switch from a focus on rock to gas. Only a few hundred million years after the big bang, the cosmos was brimming with opaque hydrogen gas that trapped light at some wavelengths from stars and galaxies. Over the first billion years, the gas became fully transparent – allowing the light to travel freely. Researchers have long sought definitive evidence to explain this flip.
>
> New data from the James Webb Space Telescope recently pinpointed the answer using a set of galaxies that existed when the universe was only 900 million years old. Stars in these galaxies emitted enough light to ionize and heat the gas around them, forming huge, transparent “bubbles.” Eventually, those bubbles met and merged, leading to today’s clear and expansive views.

More: https://eiger-jwst.github.io/index.html

yuu

1 year ago

•

100%

it’s simply too difficult for average person to login and apply to every single instance that they’re interested in

Maybe there is some misunderstanding, but why would one want to apply to multiple instances at the same time? Isn't applying to, and being active on, one good instance enough?

yuu

1 year ago

•

100%

Totally supportive. Great to have a Wayland implementation in Rust (and to see Rust's increasing adoption by the FOSS community); more specifically, Smithay, which projects beyond System76 are also building upon, like this community WM for example: https://github.com/MagmaWM/MagmaWM

yuu

1 year ago

•

100%

Wow, this is truly good, as long ago I read that many delays in public healthcare services are due to no-shows. I liked the fact that, with the information about who was more likely to be a no-show, UHP then contacted these people.

UHP was able to cut no-shows for patients who were highly likely to not to show up, by more than half. That patient population went from a dismal 15.63% show rate to a 39.77%. A dramatic increase. At the same time, patients in the moderate category improved from a 42.14% show rate to 50.22%.

Of course, this article sounds like an ad for eClinicalWorks, but interesting and very good application of AI regardless.

yuu

1 year ago

•

100%

For now I mostly see work like this aimed at the construction phase. Little by little we automate the whole thing.

Just me doing a literature note:

The authors try to find faster algorithms for sorting sequences of 3-5 elements (as larger sorts call these short routines the most) using the computer's assembly instead of higher-level C, with the number of possible instruction combinations comparable to that of a game of Go (10^700). After an instruction is selected and added to the algorithm, if the test output, given the currently selected instructions, is different from the expected output, the model invalidates "the entire algorithm"? This way, they ended up with algorithms for the "LLVM libc++ sorting library that were up to 70% faster for shorter sequences and about 1.7% faster for sequences exceeding 250,000 elements." Then they translated the assembly to C++ for LLVM libc++.
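To make "sorting sequences of 3 elements" concrete, here is a minimal sketch of a fixed-length sort built from compare-exchange steps (a textbook sorting network). It is not the algorithm the paper found, and it is Python rather than assembly; the names `compare_exchange`, `sort3`, and `check_all_orderings` are made up for illustration. The last function mirrors the idea from the note of checking the candidate's output against the expected output.

```python
from itertools import permutations


def compare_exchange(values, i, j):
    """Swap values[i] and values[j] if they are out of order."""
    if values[i] > values[j]:
        values[i], values[j] = values[j], values[i]


def sort3(a, b, c):
    """Sort exactly three elements with a fixed sequence of three steps."""
    values = [a, b, c]
    compare_exchange(values, 0, 1)  # order the first pair
    compare_exchange(values, 1, 2)  # largest element is now at index 2
    compare_exchange(values, 0, 1)  # order the remaining pair
    return tuple(values)


def check_all_orderings():
    """Compare the routine's output with the expected output for every input order."""
    return all(sort3(*p) == (1, 2, 3) for p in permutations((1, 2, 3)))
```

Because the sequence of steps is fixed, a routine like this has no loops, which is what makes the instruction-level search described above feasible for such small lengths.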

yuu

1 year ago

•

100%

A cosmic hydrogen fog??? That sounds like an atmosphere, something that a space-dwelling creature from a sci-fi novel could develop in

not gonna lie, i lol

yuu

1 year ago

•

100%

Well; darwin users, just like linux users, should also work on making packages available on their platform, as Nix is still in its adoption phase. There are many already. IIRC I, who never use macOS, put some effort into making 1 or 2 packages (likely more) build on darwin.

yuu

1 year ago

•

100%

as Reddit now going to IPO. That happened to Twitter->Mastodon, can happen to Reddit->Lemmy as well.

We had seen it coming haha

yuu

1 year ago

•

100%

Yeah, that's odd. But guess most projects are using the master branch anyway, e.g. https://github.com/MagmaWM/MagmaWM/blob/0ec02c92d63dd43d45fa3534f565fa587db506af/Cargo.toml#L20-L21

yuu

1 year ago

•

80%

I can keep Firefox bleeding edge without having to worry that the package manager is also going to update the base system, giving me a broken next boot if I run rolling releases.

On Nix[OS], one can use multiple base Nixpkgs versions for the specific packages one wants. What I have is, e.g., two flake inputs: nixpkgs and nixpkgs-update. The first provides most packages, including the base system, which I do not want to update regularly, while the second is for packages that I want to update more often, like the Web browser (for security reasons, etc.).

e.g.

- https://codeberg.org/yuuyin/yuunix/src/branch/main/flake.nix#L52-L77

- packages with pkgs (nixpkgs flake) https://codeberg.org/yuuyin/yuunix/src/branch/main/profile/packages.nix#L12-L26

- firefox with pkgs-update (nixpkgs-update flake) https://codeberg.org/yuuyin/yuunix/src/branch/main/profile/app/firefox.nix#L14-L16

yuu

1 year ago

•

100%

When I was packaging Flatpaks, the greatest downside was

No built in package manager

There is a repo with shared dependencies, but it has very few. So one needs to package all the dependencies... So, I personally am not interested in packaging for Flatpak other than on very rare occasions... Nix and Guix are definitely better solutions (except for the isolation aspect, which is not a built-in feature; you need to do it manually), and one can use them on many distros; Nix even on macOS!

yuu

1 year ago

•

100%

Not OP, but I originally chose xmonad because of Haskell. I'm still on it because it is the only WM with a "contexts" feature/extension https://github.com/procrat/xmonad-contexts

sway devs discarded its feature request https://github.com/swaywm/sway/issues/4044

and I personally am more interested now in compositors/WMs that build on Smithay (Rust) instead of wlroots (in C). Specifically https://github.com/MagmaWM/MagmaWM, which I suppose will be easier to extend.

yuu

1 year ago

•

100%

Some of them will detect if you are using virtualization. For example http://safeexambrowser.org/ by ETH Zurich

Ironically enough, it is free software https://github.com/SafeExamBrowser

newsroom.ucla.edu

The nature of an ultra-faint galaxy in the cosmic Dark Ages seen with JWST https://arxiv.org/abs/2210.15639

yuu

1 year ago

•

100%

Using it as the backend for a Web app that is very important to me (with possible IoT applications in the future as well), which I have already conceptualized, prototyped, etc.: this is what motivates me. I feel that, for this project specifically, I should first work through the official Book (which I am doing) and have a play with the recommended libraries and with the take of Rust on Nails. I also have many other interesting projects in mind, and want to contribute to e.g. Lemmy (I have many Rust projects git cloned, including it).

yuu

1 year ago

•

100%

their work essentially go in the trash

They probably learned a lot in the process, which is the most important thing for them after all. But relying on an API is risky, so always go with HTML scraping. The frontends are super useful for finding information already there without accessing the actual website. Always use Lemmy here for everything else.

yuu

1 year ago

•

100%

Alas from at least 5 years the major labels block booked a lot of the LP printing presses for reissues, making it impossible for independent artists to get a pressing without roughly a 9 month lag.

I see. In the case I mentioned, what I see is underground artists, on underground labels since the beginning, now partnering up with new underground labels that somehow got the rights for reissuing, or for releasing unreleased content.

yuu

1 year ago

•

100%

Ive made a good side career selling lp vinyl, given how poor the contemporary state of the music industry is. The worse the state of the economy the more these old artifacts become assets of economic significant.

Oh I've seen this and I'm glad this is a thing; not exactly reselling old vinyls, but the fact that underground artists are able to release new/old stuff in vinyl format with wonderful production, made with heart rather than solely with profit in mind.

I would hate to be a young performer.

I have come to know a very good young performer, but that career path was just impossible. As I see it, doing music or art at this point is only good for individual/collective human expression; making a career out of it is totally unfeasible, as it is meaningless at this point.

www.zdnet.com

cross-posted from: https://group.lt/post/65921

> Saving for the comparison with the next year

yuu

1 year ago

•

100%

@indieterminacy@lemmy.ml set community to also accept comments from undetermined language, otherwise have to manually specify English every time.

yuu

1 year ago

•

100%

The entertainment industry is nothing but capitalism, there is no emotion left. So that will not make difference other than capitalism consequences. Everything is produced by a few companies and producers, and performers just perform for the profit, fame, influence of it. AI is just the next step. For this mainstream industry in specific, I do not hold feelings. As true artists are long forgotten anyway.

I suppose it only makes sense to raise awareness on the benefits of the freely licensed software and services from the fediverse over the dangerous and unethical proprietary services in existence such as Reddit now going to IPO. That happened to Twitter->Mastodon, can happen to Reddit->Lemmy as well. I suppose as well that the users most likely to be open to the idea would be the free software, culture users to try it. Besides, an effort on content creation and content creators to make it an attractive place. What are your thoughts? What were the efforts so far? What are the challenges? Is it so hard to make people migrate?

yuu

1 year ago

•

100%

2 things that help me very much: perject for GNU Emacs, and Contexts + usual workspaces for Xmonad.

yuu

1 year ago

•

100%

can't believe it got to this point. heads on rust foundation seem more self-interested than community-oriented.

yuu

1 year ago

•

100%

I had listened to it when you originally posted and had made some annotations, commenting some now

Lamport talks about all this "developers shall be ENGINEERS and know their math", BUT most software engineering positions are not engineering and even less approach classical engineering. BECAUSE why spend effort learning math WHEN one can use all constructed abstractions to have a greater return on investment with less effort? I do not think people who do high level development need to know their math that they won't use anyway; but those jobs will likely be automated earlier.

I think, of course, actual engineering comes into play when one needs to do lower level development, depending on project domain, or things that need to be correct. I mean, systems cannot actually be 100% correct, including the fact that chips are proprietary, so there is no way to fully verify them.

Interesting mention of the clocks paper, and that its actual implicit insight is about a system's components using the same commands/inputs/computations to arrive at the same state machine; besides the consensus algorithm for fault tolerance, and the mutual exclusion algorithm.
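A minimal sketch of that insight, under assumptions of my own: if every replica applies the same commands in the same total order (here, logical timestamp with the process id as tie-breaker), every replica ends in the same state, no matter in which order the commands arrived. This is not the full algorithm from the paper (no message passing or acknowledgements), and `Command`, `apply_command`, `replay`, and `demo` are hypothetical names.

```python
from typing import NamedTuple


class Command(NamedTuple):
    timestamp: int   # logical clock value assigned by the sender
    process_id: int  # tie-breaker, so that the ordering is total
    operation: str   # "add" or "mul" (illustrative operations only)
    operand: int


def apply_command(state, command):
    """Deterministic transition function of the state machine."""
    if command.operation == "add":
        return state + command.operand
    if command.operation == "mul":
        return state * command.operand
    raise ValueError(f"unknown operation: {command.operation}")


def replay(received, initial_state=0):
    """Apply commands in (timestamp, process_id) order, ignoring arrival order."""
    state = initial_state
    for command in sorted(received, key=lambda c: (c.timestamp, c.process_id)):
        state = apply_command(state, command)
    return state


def demo():
    commands = [
        Command(1, 1, "add", 5),
        Command(1, 2, "mul", 3),
        Command(2, 1, "add", 1),
    ]
    replica_a = replay(commands)                  # arrival order as sent
    replica_b = replay(list(reversed(commands)))  # arrival order reversed
    return replica_a, replica_b
```

The operations are deliberately non-commutative: applying the reversed list without sorting would give a different result, which is why the agreed ordering matters.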

And the ideas coming up when working on problems.

yuu

1 year ago

•

100%

KDE Connect has been very unreliable to me. I'm using magic wormhole now.

yuu

2 years ago

•

100%

This dormant black hole is about 10 times more massive than the sun and is located about 1,600 light-years away in the constellation Ophiuchus, making it three times closer to Earth than the previous record holder.

yuu

2 years ago

•

100%

Artificial constellations that pollute the night sky... I remember there was a popular paper on the negative effects of this.

yuu

2 years ago

•

100%

The consequence of Docker Compose is that most people use podman containers the same way as they use docker containers. You first create the container, and then you figure a way out, how to restart the container on every reboot. And this approach does not work with podman auto-update, because it requires this process to be upside-down … Wait upside-down? … What do I mean with that?

The canonical way of starting podman containers at boottime is the creation of custom systemd units for them. This is cool and allows to have daemonless, independent containers running. podman itself provides a handy way of creating those system units, e.g. here for a new nginx container:

interesting... as far as i remember podman official docs say nothing about that; or at least i do not remember seeing anything. so i ended up using compose with the unofficial podman-compose, which ended up being very frustrating.

so i thought it was primarily meant for OpenShift instead.

maybe i'll give podman another try now that i'm aware of that systemd integration.

> This project aims at providing nightly builds of all official rust mdbooks in epub format. It is born out of the difficulty I encountered when starting my rust apprenticeship to find recent ebook versions of the official documentation.
>
> If you encounter any issue, have any suggestion or would like to improve this site and/or its content, please go to https://github.com/dieterplex/rust-ebookshelf/ and file an issue or create a pull request.

building.nubank.com.br

Always interesting to read real world applications of the concepts. Nubank's framework is a mix of storytelling, design thinking, empathy mapping, ...

> storytelling can be used to develop better products around the idea of understanding and executing the “why’s” and “how’s” of the products. Using the techniques related to it, such as research, we can simplify the way we pass messages to the user.

Nubank's framework has three phases:

> 1. Understanding: properly understand the customer problem. After that, we can create our first storyboard. When working on testing with users, a framework is good to guarantee that we’re considering all of our ideas.
> 2. Defining: how we’re going to communicate the narrative. As you can see, the storyboard is very strategic when it comes to helping influence the sequence of events and craft the narrative. Here the "movie script" is done. Now make the "movie's scene".
> 3. Designing: translate the story you wrote, because, before you started doing anything, you already knew what you were going to do. Just follow what you have planned... Understanding the pain points correctly, we also start to understand our users actions and how they think. When we master this, we can help the customer take the actions in the way that we want them to, to help them to achieve their goals.
> 4. Call to action: By knowing people’s goals and pain points, whether emotional or logistical, we can anticipate their needs.... guarantee that it is aligned with the promises we made to the customer, especially when it comes to marketing. Ask yourself if what you’re saying in the marketing campaigns are really what will be shown in the product.

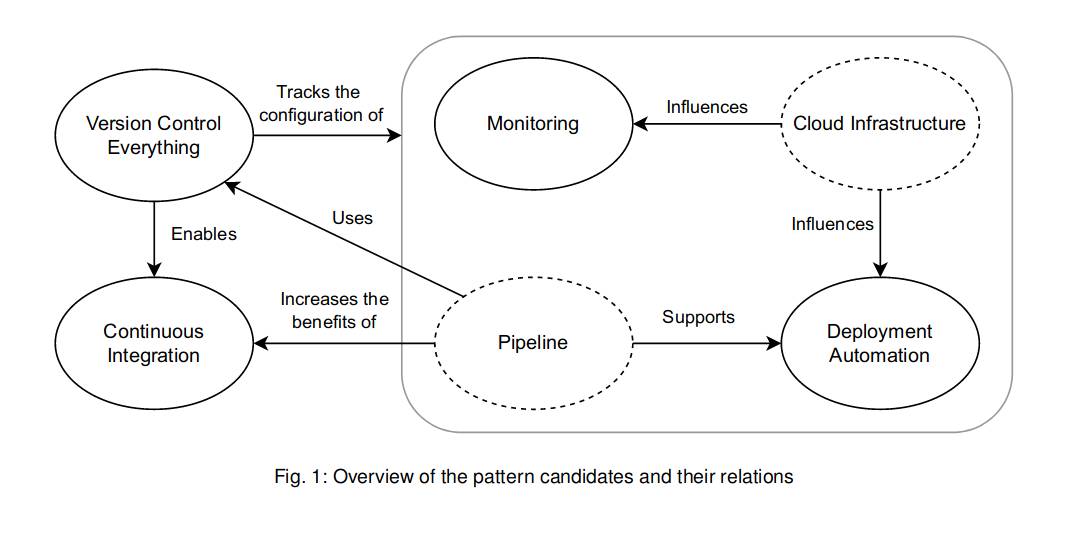

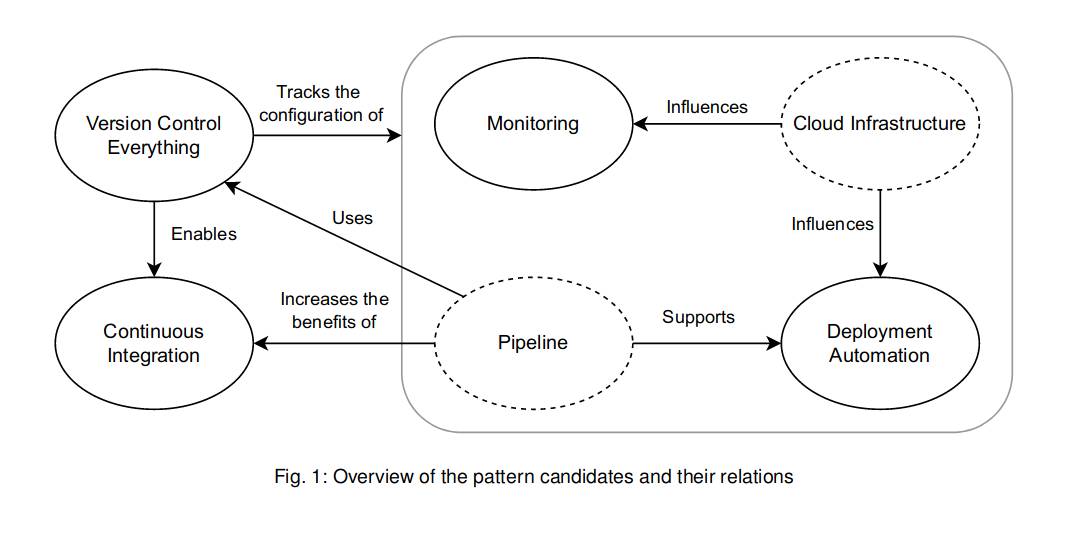

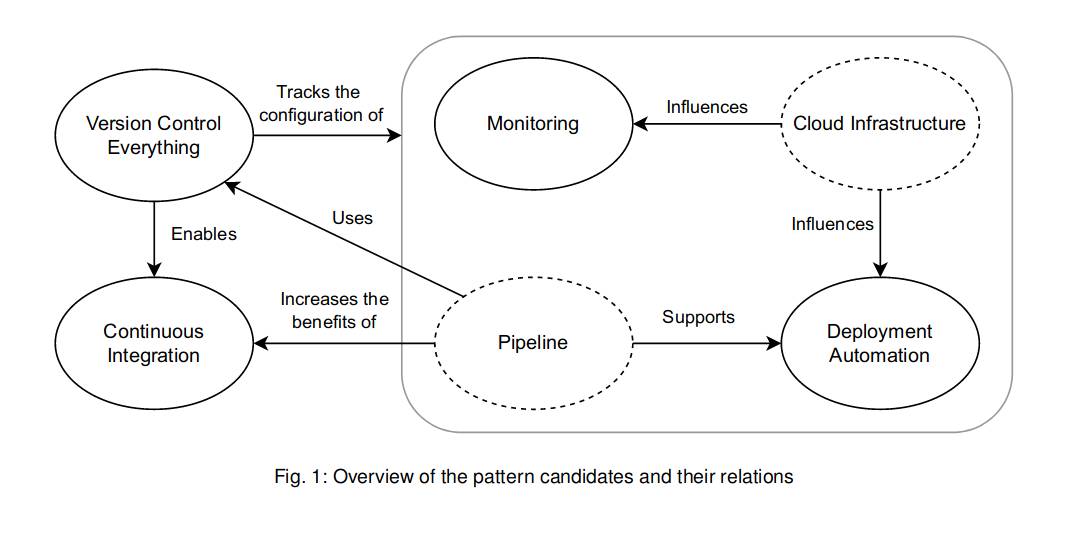

cross-posted from [!softwareengineering@group.lt](https://group.lt/c/softwareengineering): https://group.lt/post/46385

> Adopting DevOps practices is nowadays a recurring task in the industry. DevOps is a set of practices intended to reduce the friction between the software development (Dev) and the IT operations (Ops), resulting in higher quality software and a shorter development lifecycle. Even though many resources are talking about DevOps practices, they are often inconsistent with each other on the best DevOps practices. Furthermore, they lack the needed detail and structure for beginners to the DevOps field to quickly understand them.
>
> In order to tackle this issue, this paper proposes **four foundational DevOps patterns: Version Control Everything, Continuous Integration, Deployment Automation, and Monitoring**. The patterns are both detailed enough and structured to be easily reused by practitioners and flexible enough to accommodate different needs and quirks that might arise from their actual usage context. Furthermore, the **patterns are tuned to the DevOps principle of Continuous Improvement by containing metrics so that practitioners can improve their pattern implementations**.

---

The article does not describe them, but it actually identifies and includes 2 other patterns in addition to the four above (so 6 in total):

- **Cloud Infrastructure**, which includes cloud computing, scaling, infrastructure as code, ...
- **Pipeline**, "important for implementing Deployment Automation and Continuous Integration, and segregating it from the others allows us to make the solutions of these patterns easier to use, namely in contexts where a pipeline does not need to be present."

The paper is interesting for the following structure in describing the patterns:

> - Name: An evocative name for the pattern.
> - Context: Contains the context for the pattern providing a background for the problem.
> - Problem: A question representing the problem that the pattern intends to solve.
> - Forces: A list of forces that the solution must balance out.
> - Solution: A detailed description of the solution for our pattern’s problem.
> - Consequences: The implications, advantages and trade-offs caused by using the pattern.
> - Related Patterns: Patterns which are connected somehow to the one being described.
> - Metrics: A set of metrics to measure the effectiveness of the pattern’s solution implementation.
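
The eight-section template quoted above maps naturally onto a small record type. Here is a minimal Python sketch, assuming one field per section; the class name `DevOpsPattern`, the `missing_sections` helper and the placeholder strings are hypothetical illustrations, not something taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class DevOpsPattern:
    """One pattern entry, with one field per section of the quoted template."""

    name: str
    context: str
    problem: str
    forces: list[str] = field(default_factory=list)
    solution: str = ""
    consequences: list[str] = field(default_factory=list)
    related_patterns: list[str] = field(default_factory=list)
    metrics: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the names of the sections that are still empty."""
        return [section for section, value in vars(self).items() if not value]


# Placeholder content only: the strings below are NOT quotes from the paper.
ci = DevOpsPattern(
    name="Continuous Integration",
    context="(placeholder) background for the problem",
    problem="(placeholder) question the pattern intends to answer",
    related_patterns=["Pipeline"],
)

print(ci.missing_sections())
```

A helper like `missing_sections` is one way to check that a pattern write-up covers every section, including the metrics that the paper ties to Continuous Improvement.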


[!softwareengineering@group.lt](https://group.lt/c/softwareengineering) We post and discuss software engineering related information: be it programming/construction, UX/UI, software architecture, DevSecOps, software economics, research, management, requirements, AI, ... It is meant as a serious, focused community that strives for sharing content from reliable sources, and free/open access as well.

www.nngroup.com


Attention economy is a pretty important concept in today's socioeconomic systems. Here is an article by Nielsen Norman Group explaining it a bit in the context of digital products.

> Digital products are competing for users’ limited attention. The modern economy increasingly revolves around the human attention span and how products capture that attention.
>
> Attention is one of the most valuable resources of the digital age. For most of human history, access to information was limited. Centuries ago many people could not read and education was a luxury. Today we have access to information on a massive scale. Facts, literature, and art are available (often for free) to anyone with an internet connection.
>
> We are presented with a wealth of information, but we have the same amount of mental processing power as we have always had. The number of minutes has also stayed exactly the same in every day. Today attention, not information, is the limiting factor.

There are many scientific works on the topic; here are some queries in computer science / software engineering databases:

- [IEEE Xplore](https://ieeexplore.ieee.org/search/searchresult.jsp?newsearch=true&queryText=%22attention%20economy%22)
- [ACM DL](https://dl.acm.org/action/doSearch?fillQuickSearch=false&target=advanced&expand=dl&AllField=AllField%3A%28%22attention+economy%22%29)
- [arXiv](https://arxiv.org/search/?query=%22attention+economy%22&searchtype=all&source=header)

Another related article by NN/g: [The Vortex: Why Users Feel Trapped in Their Devices](https://www.nngroup.com/articles/device-vortex/)

github.com


I have been using Perject for a week as an alternative/replacement to packages like tabspaces and persp. Perject is much better than those, and I have yet to experience a bug. The workflow is:

1. create a collection of projects: perject-open-collection
2. create a project under a collection: perject-switch
3. add a buffer to the project: perject-add-buffer-to-project

You can then create collections, projects, ... and switch between them from any frame. It auto-saves state, and I found it very good at reloading the saved collections/projects; I have not experienced any bugs with it, while I would experience many bugs with persp... Perject also integrates with GNU Emacs built-ins for all that, such as desktop, project.el, tab-bar, ...

The author, overideal, released it recently, but it is already one of my favorite Emacs packages. Really worth trying out.

My config, if anyone wants to try it out: https://codeberg.org/yymacs/yymacs/src/branch/main/yyuu/mod/yyuu-mod-emacs-uix-space.el#L43-L111

modeling-languages.com


cross-posted from [!softwareengineering@group.lt](https://group.lt/c/softwareengineering): https://group.lt/post/46120

- Google: [AppSheet](https://appsheet.com/)
- Apple: [SwiftUI](https://developer.apple.com/xcode/swiftui/)
- Microsoft: [PowerApps](https://powerapps.microsoft.com)
- Amazon: [HoneyCode](https://www.honeycode.aws/), [Amplify Studio](https://aws.amazon.com/amplify/studio/)


> ## Highlights
>
> - Software development research is divided into two incommensurable paradigms.
> - The **Rational Paradigm** emphasizes problem solving, planning and methods.
> - The **Empirical Paradigm** emphasizes problem framing, improvisation and practices.
> - The Empirical Paradigm is based on data and science; the Rational Paradigm is based on assumptions and opinions.
> - The Rational Paradigm undermines the credibility of the software engineering research community.

---

Very good paper by @paulralph@mastodon.acm.org discussing the Rational Paradigm (non-empirical) and the Empirical Paradigm (evidence-based, scientific) in software engineering.

Historically the Rational Paradigm has dominated both software engineering research and industry, which is also evident in software engineering international standards, bodies of knowledge (e.g. IEEE CS SWEBOK), curriculum guidelines, ... Basically, much of the "standard" knowledge and mainstream literature has no basis in science, but in "guru" knowledge. Yet people rarely follow rational approaches, which suggest using detailed plans, ..., successfully or faithfully.

It also argues that software engineering is currently at level 2 on an "informal scale of empirical commitment". In comparison, medicine is at level 4 (the highest level of empirical commitment).

> I think SE is at level two. Most top venues expect empirical data; however, that data often does not directly address effectiveness. Empirical findings and rigorous studies compete with non-empirical concepts and anecdotal evidence. For example, some reviews of a recent paper on software development waste [168] criticized it for its limited contribution over previous work [169], even though the previous work was based entirely on anecdotal evidence and the new paper was based on a rigorous empirical study. Meanwhile, many specialist and second-tier venues do not require empirical data at all.

It concludes with some implications:

> 1. Much research involves developing new and improved development methods, tools, models, standards and techniques. Researchers who are unwittingly immersed in the Rational Paradigm may create artifacts based on unstated Rational-Paradigm assumptions, limiting their applicability and usefulness. For instance, the project management framework PRINCE2 prescribes that the project board (who set project goals) should not be the same people as project team (who design the system [108]). This is based on the Rationalist assumption that problems are given, and inhibits design coevolution.
>
> 2. Having two paradigms in the same academic community causes miscommunication [4], which undermines consensus and hinders scientific progress [171]. The fundamental rationalist critique of the Empirical Paradigm is that it is patently obvious that employing a more systematic, methodical, logical process should improve outcomes [7], [23], [119], [172], [173]. The fundamental empiricist critique of the Rational Paradigm is that there is no convincing evidence that following more systematic, methodical, logical processes is helpful or even possible [3], [5], [9], [12]. As the Rational Paradigm is grounded in Rationalist epistemology, its adherents are skeptical of empirical evidence [23]; similarly, as the Empirical Paradigm is grounded in empiricist epistemology, its adherents are skeptical of appeals to intuition and common sense [5]. In other words, scholars in different paradigms talk past each other and struggle to communicate or find common ground.
>
> 3. Many reasonable professionals, who would never buy a homeopathic remedy (because a few testimonials obviously do not constitute sound evidence of effectiveness) will adopt a software method or practice based on nothing other than a few testimonials [174], [175]. Both practitioners and researchers should demand direct empirical evaluation of the effectiveness of all proposed methods, tools, models, standards and techniques (cf. [111], [176]). When someone argues that basic standards of evidence should not apply to their research, call this what it is: the special pleading fallacy [177]. Meanwhile, peer reviewers should avoid criticizing or rejecting empirical work for contradicting non-empirical legacy concepts.
>
> 4. The Rational Paradigm leads professionals “to demand up-front statements of design requirements” and “to make contracts with one another on [this] basis”, increasing risk [5]. The Empirical Paradigm reveals why: as the goals and desiderata coevolve with the emerging software product, many projects drift away from their contracts. This drift creates a paradox for the developers: deliver exactly what the contract says for limited stakeholder benefits (and possible harms), or maximize stakeholder benefits and risk breach-of-contract litigation. Firms should therefore consider alternative arrangements including in-house development or ongoing contracts.
>
> 5. The Rational Paradigm contributes to the well-known tension between managers attempting to drive projects through cost estimates and software professionals who cannot accurately estimate costs [88]. Developers underestimate effort by 30–40% on average [178] as they rarely have sufficient information to gauge project difficulty [18]. The Empirical Paradigm reveals that design is an unpredictable, creative process, for which accounting-based control is ineffective.
>
> 6. Rational Paradigm assumptions permeate IS2010 [70] and SE2014 [179], the undergraduate model curricula for information systems and software engineering, respectively. Both curricula discuss requirements and lifecycles in depth; neither mention Reflection-in-Action, coevolution, amethodical development or any theories of SE or design (cf. [180]). Nonempirical legacy concepts including the Waterfall Model and Project Triangle should be dropped from curricula to make room for evidenced-based concepts, models and theories, just like in all of the other social and applied sciences.

---

> ## Abstract
>
> The most profound conflict in software engineering is not between positivist and interpretivist research approaches or Agile and Heavyweight software development methods, but between the Rational and Empirical Design Paradigms. The Rational and Empirical Paradigms are disparate constellations of beliefs about how software is and should be created. The Rational Paradigm remains dominant in software engineering research, standards and curricula despite being contradicted by decades of empirical research. The Rational Paradigm views analysis, design and programming as separate activities despite empirical research showing that they are simultaneous and inextricably interconnected. The Rational Paradigm views developers as executing plans despite empirical research showing that plans are a weak resource for informing situated action. The Rational Paradigm views success in terms of the Project Triangle (scope, time, cost and quality) despite empirical research showing that the Project Triangle omits critical dimensions of success. The Rational Paradigm assumes that analysts elicit requirements despite empirical research showing that analysts and stakeholders co-construct preferences. The Rational Paradigm views professionals as using software development methods despite empirical research showing that methods are rarely used, very rarely used as intended, and typically weak resources for informing situated action. This article therefore elucidates the Empirical Design Paradigm, an alternative view of software development more consistent with empirical evidence. Embracing the Empirical Paradigm is crucial for retaining scientific legitimacy, solving numerous practical problems and improving software engineering education.

yuu

2 years ago

•

100%


Oh, I misread; I thought it enabled following fediverse users from within Lemmy, but now I see it is actually the other way around. Thank you for clarifying!

yuu

2 years ago

•

100%


Lemmy users can now be followed. Just visit a user profile from another platform like Mastodon, and click the follow button, then you will receive new posts and comments in the timeline.

Does an admin need to enable the follow button? It is not appearing for me.

yuu

2 years ago

•

100%


Wonderful! Thanks contributors for all the work!

yuu

2 years ago

•

100%


I know right? JWST is giving us a pretty exciting moment in astronomy. Also many beautiful images🌠

www.quantamagazine.org


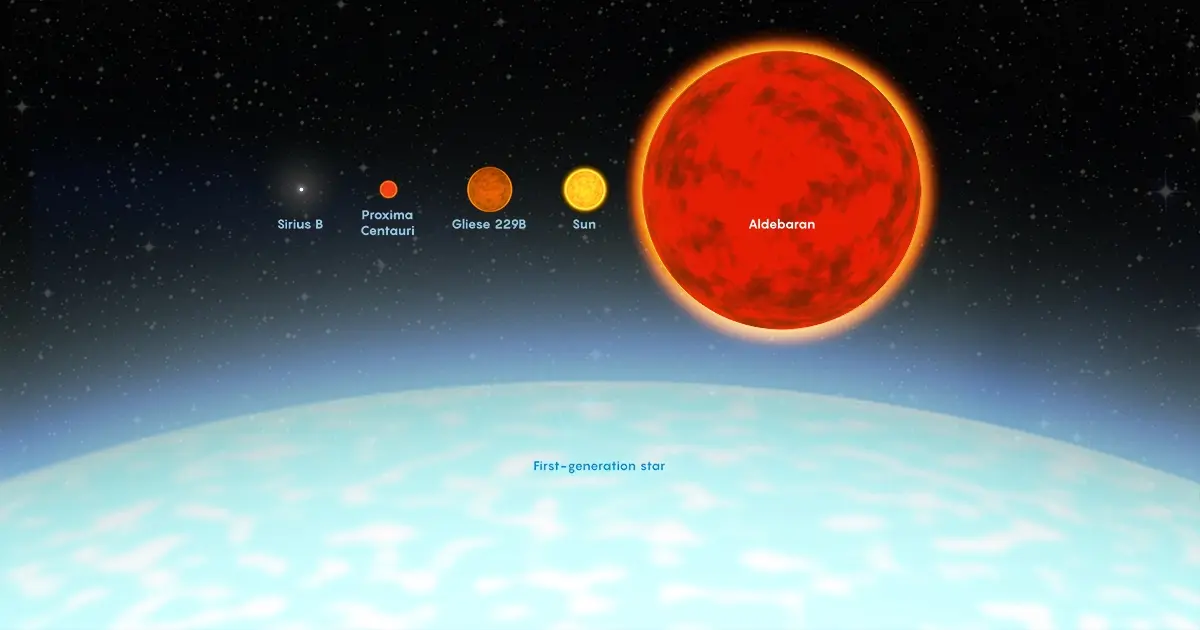

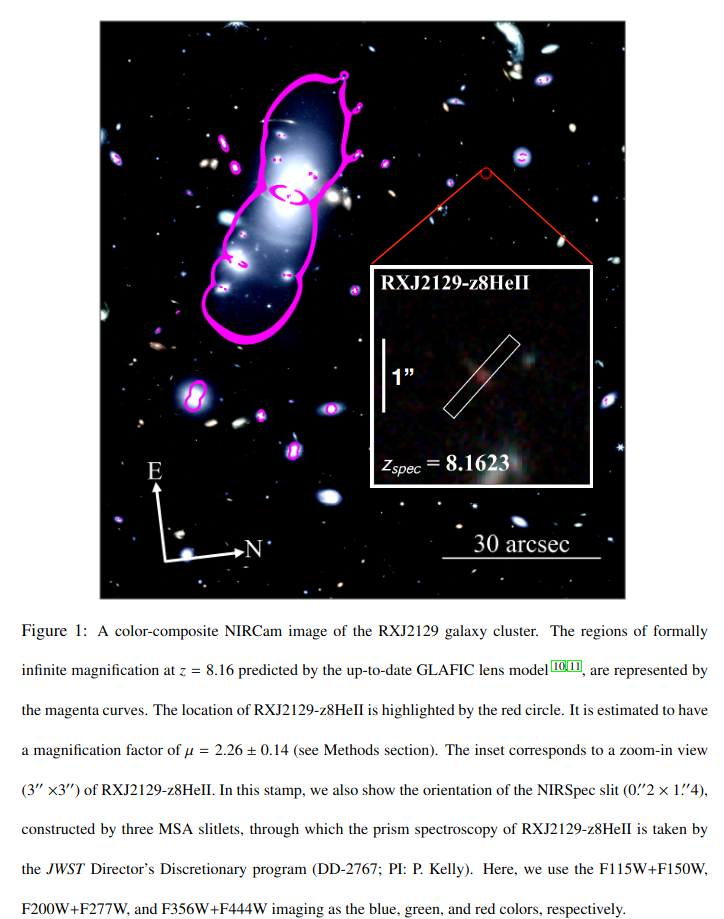

cross-posted from: https://group.lt/post/46053

> A group of astronomers poring over data from the James Webb Space Telescope (JWST) has glimpsed light from ionized helium in a distant galaxy, which could indicate the presence of the **universe’s very first generation of stars**.
>
> These long-sought, inaptly named “Population III” stars would have been ginormous balls of hydrogen and helium sculpted from the universe’s primordial gas. Theorists started imagining these first fireballs in the 1970s, hypothesizing that, after short lifetimes, they exploded as supernovas, forging heavier elements and spewing them into the cosmos. That star stuff later gave rise to Population II stars more abundant in heavy elements, then even richer Population I stars like our sun, as well as planets, asteroids, comets and eventually life itself.
>
> About 400,000 years after the Big Bang, electrons, protons and neutrons settled down enough to combine into hydrogen and helium atoms. As the temperature kept dropping, dark matter gradually clumped up, pulling the atoms with it. Inside the clumps, hydrogen and helium were squashed by gravity, condensing into enormous balls of gas until, once the balls were dense enough, nuclear fusion suddenly ignited in their centers. The first stars were born.
>
> stars in our galaxy into types I and II in 1944. The former includes our sun and other metal-rich stars; the latter contains older stars made of lighter elements. The idea of Population III stars entered the literature decades later... Their heat or explosions could have reionized the universe

More information:

- https://arxiv.org/abs/2212.04476

yuu

2 years ago

•

100%


Not the package managers as I understand, but the service providers providing the applications; so it would include e.g. everyone hosting package archive mirrors. This all makes no sense, because the Internet, which runs Linux, would basically stagnate.

yuu

2 years ago

•

100%


Article 6 of the law requires all "software application stores" to:

- Assess whether each service provided by each software application enables human-to-human communication

- Verify whether each user is over or under the age of 17

- Prevent users under 17 from installing such communication software

It may seem unbelievable that the authors of the law didn't think about this but it is not that surprising considering this is just one of the many gigantic consequences of this sloppily thought out and written law.

That law is a big document; it would have been helpful if Mullvad's article had directly cited/referenced the relevant parts so that we could verify some of that.


yuu

2 years ago

•

100%


Yeah, Lemmy is pretty good on that and overall as well. I wish more people would move here from the popular proprietary/centralized forums and the like. Maybe it just needs more word of mouth...

yuu

2 years ago

•

100%


Nice to see you and your project here as well✨✨✨

It is pretty useful! Thanks!

PS: Also worth sharing on !nixos@lemmy.ml

There are people/researchers from ACM and so on sharing pretty interesting, useful content about software engineering.

yuu

2 years ago

•

100%


Wow the rendering is much better/faster now 🌠

www.zdnet.com


cross-posted from: https://group.lt/post/44860

> Developers across government and industry should commit to using memory safe languages for new products and tools, and identify the most critical libraries and packages to shift to memory safe languages, according to a study from Consumer Reports.
>
> The US nonprofit, which is known for testing consumer products, **asked what steps can be taken to help usher in "memory safe" languages, like Rust**, over options such as C and C++. Consumer Reports said it wanted to address "industry-wide threats that cannot be solved through user behavior or even consumer choice" and it identified "memory unsafety" as one such issue.
>
> The [report](https://advocacy.consumerreports.org/research/report-future-of-memory-safety/), Future of Memory Safety, looks at range of issues, including challenges in building memory safe language adoption within universities, levels of distrust for memory safe languages, introducing memory safe languages to code bases written in other languages, and also incentives and public accountability.

More information:

- https://advocacy.consumerreports.org/research/report-future-of-memory-safety/
- https://advocacy.consumerreports.org/wp-content/uploads/2023/01/Memory-Safety-Convening-Report.pdf


cross-posted from c/softwareengineering@group.lt: https://group.lt/post/44632

> This kind of scaling issue is new to Codeberg (a nonprofit free software project), but not to the world. All projects on earth likely went through this at a certain point or will experience it in the future.
>
> When people like me talk about scaling... It's about increasing computing power, distributed storage, replicated databases and so on. There are all kinds of technology available to solve scaling issues. So why, damn, is Codeberg still having performance issues from time to time?
>
> ...we face the "worst" kind of scaling issue in my perception. That is, if you don't see it coming (e.g. because the software gets slower day by day, or because you see how the storage pool fill up). Instead, it appears out of the blue.
>
> **The hardest scaling issue is: scaling human power.**
>
> Configuration, Investigation, Maintenance, User Support, Communication – all require some effort, and it's not easy to automate. In many cases, automation would consume even more human resources to set up than we have.
>
> There are no paid night shifts, not even payment at all. Still, people have become used to the always-available guarantees, and demand the same from us: Occasional slowness in the evening of the CET timezone? Unbearable!
>
> I do understand the demand. We definitely aim for a better service than we sometimes provide. However, sometimes, the frustration of angry social-media-guys carries me away...
>
> two primary blockers that prevent scaling human resources. The first one is: trust. Because we can't yet afford hiring employees that work on tasks for a defined amount of time, work naturally has to be distributed over many volunteers with limited time commitment... second problem is in part technical. Unlike major players, which have nearly unlimited resources available to meet high demand, scaling Codeberg's systems...

TL;DR: Codeberg has sustainability issues with scaling because it is a nonprofit with very limited resources, mainly human resources, in the face of high demand. Unpaid volunteers do all the work, so it needs more volunteers and more money.

cross-posted from c/softwareengineering@group.lt: https://group.lt/post/44632 > This kind of scaling issue is new to Codeberg (a nonprofit free software project), but not to the world. All projects on earth likely went through this at a certain point or will experience it in the future. > > When people like me talk about scaling... It's about increasing computing power, distributed storage, replicated databases and so on. There are all kinds of technology available to solve scaling issues. So why, damn, is Codeberg still having performance issues from time to time? > > ...we face the "worst" kind of scaling issue in my perception. That is, if you don't see it coming (e.g. because the software gets slower day by day, or because you see how the storage pool fill up). Instead, it appears out of the blue. > > **The hardest scaling issue is: scaling human power.** > > Configuration, Investigation, Maintenance, User Support, Communication – all require some effort, and it's not easy to automate. In many cases, automation would consume even more human resources to set up than we have. > > There are no paid night shifts, not even payment at all. Still, people have become used to the always-available guarantees, and demand the same from us: Occasional slowness in the evening of the CET timezone? Unbearable! > >I do understand the demand. We definitely aim for a better service than we sometimes provide. However, sometimes, the frustration of angry social-media-guys carries me away... > > two primary blockers that prevent scaling human resources. The first one is: trust. Because we can't yet afford hiring employees that work on tasks for a defined amount of time, work naturally has to be distributed over many volunteers with limited time commitment... second problem is a in part technical. Unlike major players, which have nearly unlimited resources available to meet high demand, scaling Codeberg's systems... 

lawsofux.com

developers.googleblog.com

> How could you use Android, Firebase, TensorFlow, Google Cloud, Flutter, or any of your favorite Google technologies to promote employment for all, economic growth, and climate action?
>
> Join us to build solutions for one or more of the United Nations 17 Sustainable Development Goals. These goals were agreed upon in 2015 by all 193 United Nations Member States and aim to end poverty, ensure prosperity, and protect the planet by 2030.

For students. Mostly interesting for promoting the sustainable goals.